Gerald McAlister | RGB Haptics | Beginner

Audio Haptics

This tutorial covers how to use RGB Haptics to add detailed haptics based on audio files. We’ll go over the basic settings, the advanced settings available for audio-based haptics, and some of the advanced features you can use to fine-tune your haptics. Several examples are included in the RGB Haptics asset, and each is listed in the appropriate section as we cover the corresponding feature.

Basic Audio Haptic Setup

As we covered in the setup tutorial, the ContinuousHaptics script is the simplest way to play a haptic out of a controller when you touch an object. It is helpful for any haptics that need to play from an object continuously. Other scripts can also play haptics, such as the CollisionHaptics script. Each of these scripts can currently use one of two types of haptics: Waveform or Audio. This tutorial focuses on the Audio option, but the key point is that any haptic script can use audio as a source.

I mention this because it helps to understand which settings are actually specific to audio haptics. There are only two: an audio clip to use for the haptic, and a synchronization source that keeps the haptic in sync with audio playing in the scene. All other settings are not specific to audio and will vary depending on which type of haptic script you are using.

Let’s start with a very simple setup: create an empty scene with nothing more than a VR player, a plane, and a cube. We cover how to set this up in a tutorial on our website. Also ensure that you have attached the XRNodeHaptics script to each hand, configured as detailed in our setup tutorial. Now add a ContinuousHaptics component to the cube, attach an AudioClip to it, and enable “Play On Awake”.

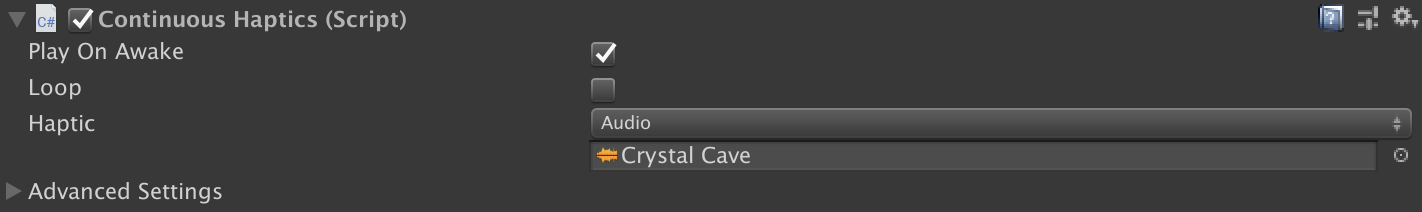

The ContinuousHaptics settings should look like this.

When you play this scene, you will feel the audio when you place your hand into the cube. We have included this as a sample scene called “Basic” in the “Audio Haptics” folder of the demo scenes that ship with the asset. In this scene you can only feel the haptics, not hear the audio. This is intentional, so that you can include audio-based haptics without needing an audio source. At times you may want both, so let’s cover how to do that now!

Audio Syncing

For this section, we’re going to get our cube to not only emit haptics, but also play the audio so you can hear it. On the surface, this seems simple: add an AudioSource component to the cube, set its AudioClip to the same clip used by our ContinuousHaptics, and ensure “Play On Awake” is enabled there too. However, you may quickly notice that the audio you hear and the haptics you feel sometimes drift out of sync. This is normal and expected!

The problem is that Unity plays back audio on a separate thread, while we play back our haptics on the main thread. We have to do this because, to our knowledge, Unity’s XR callbacks currently cannot be accessed from a separate thread. As a result, we need some way to synchronize our AudioSource with our ContinuousHaptics. To do that, set “Sync Audio Source” to the newly created AudioSource. You can find this option under the advanced settings.

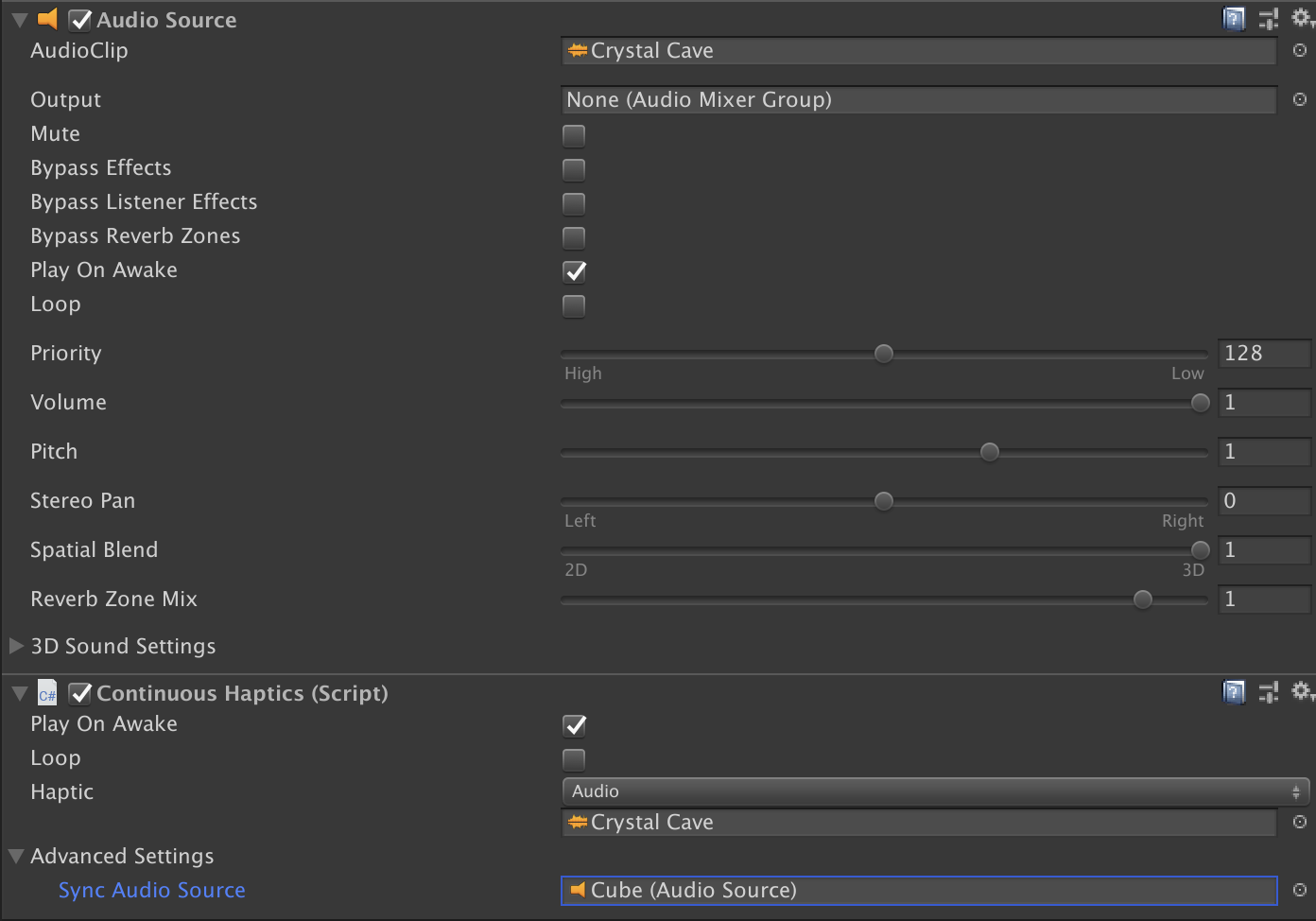

Your new components should look like this now.

When you play this scene now, you should feel the haptics in sync with what you hear from the audio! We’ve included a sample scene for this called “Syncing” in the “Audio Haptics” folder. Setups like this are great for objects that play a sound the player should feel when they touch them. You can also give the haptic a different audio file than the one the audio source plays. For example, if you have an old-school boombox in a game, you might want the haptics to play an audio file containing only the bass of a soundtrack, while the audio source plays the full track. With RGB Haptics, you can do this by simply assigning a different clip to each!
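To make the threading point above more concrete, here is a minimal sketch of how main-thread code can follow audio that is mixed on another thread: each frame it asks the AudioSource where playback currently is and reads the clip's samples at that position. This is only an illustration built on standard Unity calls (`AudioSource.timeSamples`, `AudioClip.GetData`); it is not part of RGB Haptics and is not a description of how the asset is implemented. The class name `PlaybackAmplitudeProbe` and its fields are hypothetical.

```csharp
using UnityEngine;

// Illustrative sketch only: NOT part of RGB Haptics.
// Polls the audio playback position on the main thread and estimates
// how loud the clip is at that position.
public class PlaybackAmplitudeProbe : MonoBehaviour
{
    public AudioSource Source;      // hypothetical field: the audio to follow
    public int WindowSize = 256;    // hypothetical field: frames read per poll

    // Most recent RMS amplitude, roughly in the range 0..1.
    public float CurrentAmplitude { get; private set; }

    private float[] mWindow;

    void Update()
    {
        AudioClip clip = Source != null ? Source.clip : null;
        if (clip == null || clip.samples <= 0 || !Source.isPlaying)
        {
            CurrentAmplitude = 0f;
            return;
        }

        // GetData fills an interleaved buffer, so size it per channel.
        int length = Mathf.Max(1, WindowSize) * clip.channels;
        if (mWindow == null || mWindow.Length != length)
            mWindow = new float[length];

        // timeSamples is the playback position in PCM samples, so reading
        // from here keeps us aligned with what is actually being heard.
        int offset = Mathf.Clamp(Source.timeSamples, 0, clip.samples - 1);

        // GetData requires a clip whose sample data is loaded in memory
        // (for example, one imported as Decompress On Load).
        if (!clip.GetData(mWindow, offset))
        {
            CurrentAmplitude = 0f;
            return;
        }

        // Root mean square of the window as a simple loudness estimate.
        float sum = 0f;
        for (int i = 0; i < mWindow.Length; i++)
            sum += mWindow[i] * mWindow[i];
        CurrentAmplitude = Mathf.Sqrt(sum / mWindow.Length);
    }
}
```

Because the position is re-read from the AudioSource every frame, any drift between the audio thread and the main thread is corrected on the next poll rather than accumulating.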

Scripting Controls

At this point, everything we have done has been through Unity’s Editor. While this is great for setting up simple haptics, there are many cases where you will want finer-grained playback control from your code, so let’s talk about how that works. For audio haptics, you can control the majority of the settings from code.

Let’s start with a simple task: switching between two different AudioClips whenever the space bar is pressed. Create a new script called HapticClipSwitcher and add the following code to it:

```csharp
using UnityEngine;
using RGBSchemes;

public class HapticClipSwitcher : MonoBehaviour
{
    public AudioClip AudioA, AudioB;
    public AudioSource Audio;
    public ContinuousHaptics AudioHaptic;

    /// <summary>
    /// Check if clipB is playing.
    /// </summary>
    private bool mClipB;

    // Update is called once per frame
    void Update()
    {
        if (Input.GetKeyDown(KeyCode.Space))
        {
            // Stop playing everything.
            Audio.Stop();
            AudioHaptic.Stop();

            // Change out the clips.
            AudioHaptic.Haptic.SetHapticSource(mClipB ? AudioA : AudioB);
            Audio.clip = mClipB ? AudioA : AudioB;

            // Start playing again.
            Audio.Play();
            AudioHaptic.Play();

            // Flip our clip.
            mClipB = !mClipB;
        }
    }
}
```

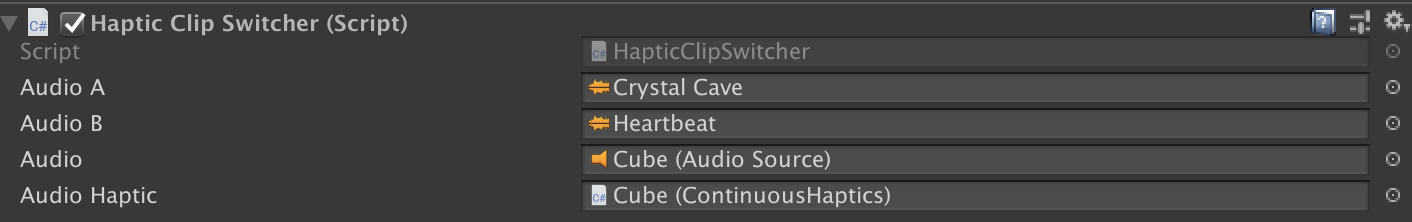

Go ahead and add this to your player’s object as a new component, and configure the two clips with different AudioClips, then also make sure that you set the Cube GameObject as the Audio and Audio Haptic sources. It should look something like this:

Two AudioClips and our Haptic source.

Two AudioClips and our Haptic source.

The code above is pretty simple, so let’s quickly run through what’s happening in it. First, we have two AudioClips exposed as public fields. These are used to set both the AudioSource clip and the ContinuousHaptics clip. We use a boolean to track which one we should use next. When the user presses the space bar, we stop playback on both the audio source and the haptic, swap the clips, start playing again, then flip our clip toggle. Pretty straightforward!

There are a couple of things to keep in mind about this example. First, note that we update both the haptic clip and the audio clip. This is because we have set them to be synchronized, so both clips must be the same length! If you decide to change only the haptic, make sure that your new clip is the same length as the audio clip, or that you have not set up the synchronization like we did previously.
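As a sketch of how you might guard against mismatched lengths, the component below refuses to swap when the audio clip and haptic clip differ in duration. It reuses only the calls shown in HapticClipSwitcher (`Stop`, `Play`, `Haptic.SetHapticSource`) plus Unity's `AudioClip.length`. The class name `SyncedClipSwapper`, the `TrySwap` method, and the `ToleranceSeconds` value are all hypothetical and not part of RGB Haptics.

```csharp
using UnityEngine;
using RGBSchemes;

// Hypothetical helper: swaps the audio and haptic clips together, but only
// when their lengths match closely enough to stay synchronized.
public class SyncedClipSwapper : MonoBehaviour
{
    public AudioSource Audio;
    public ContinuousHaptics AudioHaptic;

    // Assumed tolerance for "same length"; tune or set to 0 as needed.
    public float ToleranceSeconds = 0.01f;

    // Returns true if the swap happened, false if it was rejected.
    public bool TrySwap(AudioClip audioClip, AudioClip hapticClip)
    {
        if (audioClip == null || hapticClip == null)
            return false;

        // AudioClip.length is the clip duration in seconds.
        if (Mathf.Abs(audioClip.length - hapticClip.length) > ToleranceSeconds)
        {
            Debug.LogWarning("Clip lengths differ; skipping swap to avoid losing sync.");
            return false;
        }

        // Same stop / swap / play sequence as HapticClipSwitcher.
        Audio.Stop();
        AudioHaptic.Stop();

        AudioHaptic.Haptic.SetHapticSource(hapticClip);
        Audio.clip = audioClip;

        Audio.Play();
        AudioHaptic.Play();
        return true;
    }
}
```

This also covers the boombox case from earlier: pass the full soundtrack as `audioClip` and a bass-only version of the same length as `hapticClip`.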

When you play this scene, you can press the space bar and both the audio and the haptics will switch clips as you would expect! This scene is also included as a demo called “Scripting”, once again in the “Audio Haptics” folder. Just as you can Stop and Play a haptic clip, you can also Pause a haptic clip. To resume it when paused, you can use the Play method as you normally would. The API is purposefully kept similar to that of AudioSource, so that you can easily match the method calls you make for haptics with those you make for audio.
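Here is a minimal sketch of pausing and resuming both together. It assumes `ContinuousHaptics` exposes a parameterless `Pause()` alongside the `Play()` and `Stop()` calls used earlier, which matches the description above but is not shown in the sample code. The class name `HapticPauseToggle` and the choice of the P key are hypothetical.

```csharp
using UnityEngine;
using RGBSchemes;

// Hypothetical example: toggles pause on the audio and the haptic together.
public class HapticPauseToggle : MonoBehaviour
{
    public AudioSource Audio;
    public ContinuousHaptics AudioHaptic;

    // Tracks whether we have paused playback ourselves.
    private bool mPaused;

    void Update()
    {
        if (!Input.GetKeyDown(KeyCode.P))
            return;

        if (mPaused)
        {
            // Resume: UnPause continues the AudioSource from where it was
            // paused; the haptic resumes through Play, as described above.
            Audio.UnPause();
            AudioHaptic.Play();
        }
        else
        {
            // Assumption: Pause() on the haptic takes no arguments.
            Audio.Pause();
            AudioHaptic.Pause();
        }

        mPaused = !mPaused;
    }
}
```

Pausing both in the same frame keeps them at matching positions, so they should still line up when playback resumes.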