Gerald Mcalister | February 9, 2017 | Dev Diary

Oculus Touch and Finger Stuff Part 1

Our first developer diary covered how to use the HTC Vive to create and use a set of hands in virtual reality. Today, we want to expand that functionality to the Oculus Rift, and discuss how you can add finger controls to your game as well. This will be a completely separate tutorial from our HTC Vive version, so there is no need to download the project from there, but it will focus specifically on how to do this in Unity. We may cover how to do this in other setups in the future, but we want to start with Unity since there are a lot of indie developers who are starting with it. Today, we’ll start with part one of setting everything up, so with that, let’s dive into using Oculus Touch in Unity! Edit: Part two is now available as well!

Initial Setup

To get started, we need to create a simple Unity project like we discussed in one of our previous developer diaries. Specifically, we need a simple Oculus Rift project with just a simple floor (no need to add anything else yet). Once you have your project set up, we will want to download one more SDK for Unity from Oculus: the Avatar SDK. This SDK allows you to create a virtual representation of the player in virtual reality. This is very important, as it will be what we actually show the user for their hands, and it will handle all of our needed hand tracking for us.

The Avatar SDK

You can download the Avatar SDK from here by clicking on “Details” next to it, accepting the Oculus Terms and Conditions, then clicking download. Once you have that downloaded, extract the archive and open it up. You should see a Unity folder with a file called OvrAvatar.unityPackage. Take that file and drag it into your Unity project to import its assets. This will give you a new folder called “OvrAvatar” in your Assets folder. Within this folder, there should be a “Content” folder. Expand that and find the “Prefabs” folder within it. You should see two assets in it: LocalAvatar and RemoteAvatar. We want to focus on LocalAvatar, as this is what we will use to display the player’s hands.

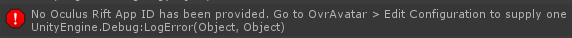

Go ahead and drag the LocalAvatar into your scene (as its own game object, not under the OVRCameraRig). Then run your project (make sure your Touch controllers are on). You should immediately be able to see your hands in your app, including the ability to move your fingers and see them in virtual reality. Note that you will see an error saying you must supply an Oculus Rift App ID for your project. You can ignore this for testing, but you will need to add one before you can create a final build. Even so, you won’t be able to interact with anything quite yet, as your hands do not have any collisions associated with them. For this, we can utilize some of the code from our previous tutorial to add these interactions into our demo.

Grabbing Objects

At this point, much of this tutorial will be the same as our previous one on hand interactions with the Vive. In this case, let’s start by adding Sphere Colliders to the LeftHandAnchor and RightHandAnchor objects under the OVRCameraRig. You will want to set their radius to 0.075, as this seems to be the best size for them with respect to the hand models. Then check the “Is Trigger” checkbox on them as well. This ensures that when the hands overlap something, they detect the object only on entry and exit of the overlap. Finally, add a Rigidbody to each hand, deselecting the “Use Gravity” checkbox and selecting the “Is Kinematic” checkbox, so that the player can move the hands around themselves.
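If you would rather not click through the Inspector for each hand, the same settings can be applied from a script. The following is a minimal sketch, assuming a hypothetical helper component named HandColliderSetup (it is not part of the Oculus SDK) that you attach to both LeftHandAnchor and RightHandAnchor:

```csharp
using UnityEngine;

// Hypothetical helper, not part of the Oculus SDK: attach it to LeftHandAnchor
// and RightHandAnchor to apply the collider and Rigidbody settings in code.
public class HandColliderSetup : MonoBehaviour
{
    // Radius suggested in the text for the default hand models.
    public float Radius = 0.075f;

    void Awake()
    {
        // Reuse an existing Sphere Collider if one was already added by hand.
        SphereCollider sphere = GetComponent<SphereCollider>();
        if (sphere == null)
        {
            sphere = gameObject.AddComponent<SphereCollider>();
        }
        sphere.radius = Radius;
        sphere.isTrigger = true;   // same as ticking "Is Trigger"

        Rigidbody body = GetComponent<Rigidbody>();
        if (body == null)
        {
            body = gameObject.AddComponent<Rigidbody>();
        }
        body.useGravity = false;   // same as unticking "Use Gravity"
        body.isKinematic = true;   // same as ticking "Is Kinematic"
    }
}
```

Because this runs in Awake(), the Rigidbody already exists by the time any other script on the same object looks it up in Start().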

At this point, let’s go ahead and create a new script called Hand.cs (again, this is very similar to the previous tutorial for the HTC Vive). Paste the following into the body of the class in Hand.cs:

    // Possible states for a hand.
    public enum State {
        EMPTY,
        TOUCHING,
        HOLDING
    };

    // Which Touch controller this hand represents; set it per hand in the Inspector.
    public OVRInput.Controller Controller = OVRInput.Controller.LTouch;
    // Public so other scripts can check whether the player is holding something.
    public State mHandState = State.EMPTY;
    // Rigidbody that held objects are attached to; defaults to the hand itself.
    public Rigidbody AttachPoint = null;
    public bool IgnoreContactPoint = false;

    private Rigidbody mHeldObject;
    private FixedJoint mTempJoint;

    void Start () {
        if (AttachPoint == null) {
            AttachPoint = GetComponent<Rigidbody>();
        }
    }

    // An empty hand that touches a grabbable Rigidbody starts tracking it.
    void OnTriggerEnter(Collider collider) {
        if (mHandState == State.EMPTY) {
            GameObject temp = collider.gameObject;
            if (temp != null && temp.layer == LayerMask.NameToLayer("grabbable") && temp.GetComponent<Rigidbody>() != null) {
                mHeldObject = temp.GetComponent<Rigidbody>();
                mHandState = State.TOUCHING;
            }
        }
    }

    // Leaving a grabbable object while not holding anything resets the hand.
    void OnTriggerExit(Collider collider) {
        if (mHandState != State.HOLDING) {
            if (collider.gameObject.layer == LayerMask.NameToLayer("grabbable")) {
                mHeldObject = null;
                mHandState = State.EMPTY;
            }
        }
    }

    void Update () {
        switch (mHandState) {
            case State.TOUCHING:
                // Grab: hand trigger squeezed at least halfway.
                if (mTempJoint == null && OVRInput.Get(OVRInput.Axis1D.PrimaryHandTrigger, Controller) >= 0.5f) {
                    mHeldObject.velocity = Vector3.zero;
                    mTempJoint = mHeldObject.gameObject.AddComponent<FixedJoint>();
                    mTempJoint.connectedBody = AttachPoint;
                    mHandState = State.HOLDING;
                }
                break;
            case State.HOLDING:
                // Release: hand trigger let go past halfway.
                if (mTempJoint != null && OVRInput.Get(OVRInput.Axis1D.PrimaryHandTrigger, Controller) < 0.5f) {
                    Object.DestroyImmediate(mTempJoint);
                    mTempJoint = null;
                    throwObject();
                    mHandState = State.EMPTY;
                }
                break;
        }
    }

    // Hands the controller's current motion over to the released object.
    private void throwObject() {
        mHeldObject.velocity = OVRInput.GetLocalControllerVelocity(Controller);
        mHeldObject.angularVelocity = OVRInput.GetLocalControllerAngularVelocity(Controller).eulerAngles * Mathf.Deg2Rad;
        mHeldObject.maxAngularVelocity = mHeldObject.angularVelocity.magnitude;
    }

This may look intimidating, but let’s go ahead and break it down. If you’ve already done this for the HTC Vive in our previous tutorial, the first section should look very familiar to you. We start by creating a State enumeration to hold the possible states for our hand. Next, we have an OVRInput.Controller field. This is key, as it signifies which hand the controller represents. Oculus differentiates each hand, so make sure you change this for each hand in the Unity Inspector window. Next, we create a hand state variable to adjust internally, though we leave it public so we can check in other scripts if the player is holding something. Then we create an AttachPoint variable. This determines where the objects we pick up should be attached. By default we attach them to the hand itself, though you can change this to be another object in your app. After that, we have the “Ignore Contact Point” option, which lets you choose between having a picked-up object stay where the hand grabbed it and centering it on the hand. After that, we create temporary variables for the object we are holding, and a temporary joint for moving said object.
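Since the hand state is public, another script can read it. As a rough sketch, assuming the script above lives in a class named Hand (so the enumeration is Hand.State), a hypothetical HoldWatcher component could log whenever a hand starts or stops holding something:

```csharp
using UnityEngine;

// Hypothetical example component: watches one Hand and logs state changes.
// Assumes the tutorial script is a MonoBehaviour class named Hand.
public class HoldWatcher : MonoBehaviour
{
    // Assign the LeftHandAnchor or RightHandAnchor Hand in the Inspector.
    public Hand TargetHand;

    private Hand.State mLastState = Hand.State.EMPTY;

    void Update()
    {
        if (TargetHand == null)
        {
            return;
        }

        // Only react when the state actually changes, not every frame.
        if (TargetHand.mHandState != mLastState)
        {
            bool wasHolding = mLastState == Hand.State.HOLDING;
            mLastState = TargetHand.mHandState;

            if (mLastState == Hand.State.HOLDING)
            {
                Debug.Log(TargetHand.Controller + " picked something up");
            }
            else if (wasHolding)
            {
                Debug.Log(TargetHand.Controller + " let go");
            }
        }
    }
}
```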

After this, we have our Start() method. When a hand initializes, we get its rigid body component. This is used as the attach point when we pick up objects, if we have not specified one in the Inspector view. We then have our OnTriggerEnter() and OnTriggerExit() methods. The first handles a hand colliding with an object. It checks that we do not already have something in this hand, and then ensures that the object is on the grabbable layer and has a rigid body component attached to it. We then store it as our held object and change the hand state to touching. OnTriggerExit() does the opposite, checking that we are not holding an object, and that the object we are no longer touching is on the grabbable layer. We then set the held object to null and set the hand state to empty.

After this, we have our Update() method. This is where we actually handle picking up and dropping objects. We begin with the TOUCHING state. If there is no temporary joint and the player is pressing down on the hand trigger with enough pressure (>= 0.5f in this case), we set the held object’s velocity to zero, and create a temporary joint attached to it. We then connect that joint to our AttachPoint (by default, the hand itself) and set the hand state to HOLDING. If the hand is already in the HOLDING state, we check that we do have a temporary joint (i.e. that it is not null) and that the player has released enough of the trigger (in this case, < 0.5f). If so, we immediately destroy the temporary joint, and set it to null, signifying that it is no longer in use. We then throw the object using a throw method (described further below) and set the hand state to EMPTY.

The throw method is a bit tricky, but works for the most part. That said, it currently has a bug in it where the values for the angular velocity are clamped to [0, 2 * Pi]. What this means is that when you throw an object, the rotational direction can sometimes be incorrect based on how it is thrown. Oculus is currently working on a fix for this as of December, so we are keeping this bug in as it should resolve itself with later SDK updates. For now, we begin by setting the held object’s velocity to the controller’s velocity. We then set the angular velocity to the controller’s angular velocity as well. Finally, we set the maximum angular velocity to the magnitude of the angular velocity, ensuring that the rotation does not go too crazy.
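If the wrong spin direction bothers you before an SDK fix arrives, one possible workaround is to reinterpret each angle as a signed value before converting to radians. This is only a sketch under an assumption we have not verified against the SDK: that the reported Euler angles are the true per-axis rates wrapped into [0, 360) degrees, and that the real rate on each axis is under 180 degrees per second. The ThrowMath class and its SignedRadians method are hypothetical names; Mathf.DeltaAngle is a standard Unity function.

```csharp
using UnityEngine;

// Hypothetical helper, not part of the Oculus SDK.
public static class ThrowMath
{
    // Maps each Euler component from [0, 360) degrees to (-180, 180] degrees,
    // then converts the result to radians. For example, 350 becomes -10.
    // Assumption: the true per-axis rate is below 180 degrees per second.
    public static Vector3 SignedRadians(Vector3 eulerDegrees)
    {
        Vector3 signedDegrees = new Vector3(
            Mathf.DeltaAngle(0f, eulerDegrees.x),
            Mathf.DeltaAngle(0f, eulerDegrees.y),
            Mathf.DeltaAngle(0f, eulerDegrees.z));

        return signedDegrees * Mathf.Deg2Rad;
    }
}
```

To try it, the angular velocity line in throwObject() would pass the controller’s eulerAngles through ThrowMath.SignedRadians() instead of multiplying them by Mathf.Deg2Rad directly. Fast throws that exceed the assumed limit would still spin the wrong way, so treat this as an experiment rather than a fix.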

At this point, the hand script should be set to go. To test it, attach the script to each hand anchor, alongside the Sphere Collider and Rigidbody added earlier. Ensure that the Controller dropdown is properly set for each hand (“L Touch” for the left hand and “R Touch” for the right hand). Next, add a cube to your scene and add a Rigidbody to it. Ensure that it is on a layer called “grabbable”, and then everything should be ready to go!
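The test cube can also be created from code, which is handy if you want several of them. Here is a minimal sketch, assuming a hypothetical GrabbableCubeSpawner component placed on any object in the scene; the position and size are made-up values, and the “grabbable” layer must already exist in your project’s layer settings:

```csharp
using UnityEngine;

// Hypothetical example component: spawns one grabbable test cube at startup.
public class GrabbableCubeSpawner : MonoBehaviour
{
    void Start()
    {
        // NameToLayer returns -1 when the layer has not been created yet.
        int layer = LayerMask.NameToLayer("grabbable");
        if (layer < 0)
        {
            Debug.LogWarning("Add a layer named \"grabbable\" before spawning cubes.");
            return;
        }

        // CreatePrimitive gives the cube a mesh and a Box Collider.
        GameObject cube = GameObject.CreatePrimitive(PrimitiveType.Cube);
        cube.transform.position = new Vector3(0f, 1f, 0.5f); // made-up spot within reach
        cube.transform.localScale = Vector3.one * 0.2f;      // made-up hand-sized cube
        cube.layer = layer;

        // The hand script only grabs objects that have a Rigidbody.
        cube.AddComponent<Rigidbody>();
    }
}
```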

The Final Build

At this point, you probably want to do a final build to record some quick footage of what you made! If you’ve gone ahead and done this already, you may notice that while the game runs just fine, you can’t actually see your hands! This is due to a bug in the Avatar SDK, as a result of which you currently need to export several shaders. On the downloads page for the Avatar SDK there is a comment that talks about how to fix this. If you are still having issues seeing the Avatar, however, ensure that you have done a 64-bit build, as the needed libraries from the Oculus Runtime do not support x86.
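If you want to be sure the build is always 64-bit, you can script it from the editor instead of relying on the Build Settings window. The following is a rough sketch, assuming a hypothetical Win64Build class saved in an Editor folder; the scene path and output path are placeholders you would replace with your own:

```csharp
#if UNITY_EDITOR
using UnityEditor;

// Hypothetical editor helper: adds a menu item that always builds for 64-bit Windows.
public static class Win64Build
{
    [MenuItem("Build/Windows 64-bit (sketch)")]
    public static void Build()
    {
        // Placeholder paths: point these at your own scene and output folder.
        string[] scenes = { "Assets/Main.unity" };
        string outputPath = "Builds/TouchDemo.exe";

        // StandaloneWindows64 selects the x86_64 player rather than x86.
        BuildPipeline.BuildPlayer(
            scenes,
            outputPath,
            BuildTarget.StandaloneWindows64,
            BuildOptions.None);
    }
}
#endif
```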

Part two will be posted next week where we will cover our method of adding finger interactions to a scene, as well as how to load a player’s Avatar rather than the default one provided by Oculus. For now, we leave you to experiment with this setup! Have any questions? Feel free to post them below, and don’t forget to like and share if you enjoy our developer diaries!